According to a former Google executive, the technological singularity is coming. What's more, he says it poses a grave threat to humanity.

Mo Gawdat, the former chief business officer of Google’s moonshot organization, which was called Google X at the time, issued his warning in a new interview with The Times.

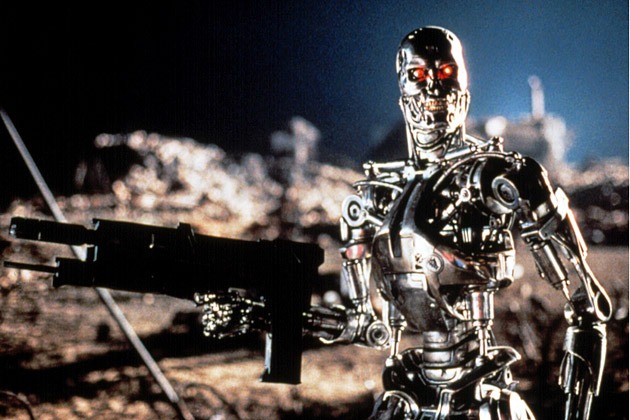

In the interview, he said he believes that artificial general intelligence (AGI), the kind of all-powerful, sentient AI seen in science fiction – like the Terminator’s Skynet – is inevitable, and that once it’s here, humanity may very well find itself facing an apocalypse brought about by godlike machines.

Gawdat said that he had his frightening revelation while working with artificial intelligence developers at Google X, who were building robotic arms capable of finding and picking up a small ball. After a period of slow progress, one arm grabbed the ball and appeared to hold it up to the researchers in a gesture that, to him, seemed like showing off.

“And suddenly I realized that this is really scary. It completely paralyzed me,” Gawdat said. “The reality is that we are creating God.”

AI Might Be The Real Danger

There is no shortage of AI fearmongers in the tech industry. For example, Elon Musk himself – currently the world’s richest man – has repeatedly warned the world about the dangers of AI one day conquering humanity. But that kind of speculative perspective overlooks the real dangers and harms of the AI we’ve already built.

For example, facial recognition and predictive policing algorithms have caused real harm in underserved communities. Countless algorithms continue to propagate and encode institutional racism across the board. Those are problems that can be solved through human oversight and regulation.

However, Gawdat thinks that the development of AI will inevitably lead to a point where it becomes a kind of deity, one that future humans depend on so heavily that it can no longer be controlled and instead controls us. In other words, it will become “God.”